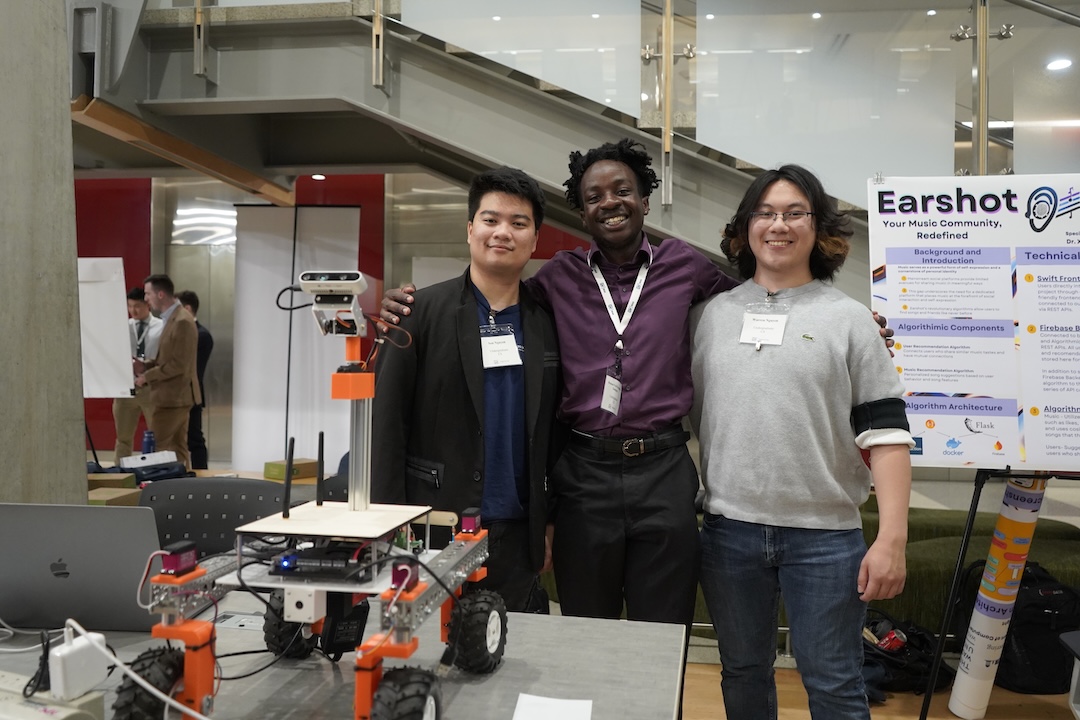

Downward Facing Visual Odometry

Project Team

Son Nguyen

Warren Nguyen

Renaud Fred Noubieptie

Project Sponsor

Ivan Galysh

Project Mentor

Dennis Afanasev

Instructors

Dr. Tim Wood, CS, GW Engineering

Xiaodong Qu, CS, GW Engineering

Position and velocity tracking with conventional methods introduces problems of precision, cost, and reliability. GPS only works with a clear view of the sky and has large margins of error. Reliable inertial measurement units (IMUs) are expensive and impractical for commercial use. Wheel odometry can be unreliable due to wheel slip. Visual odometry is cheaper and can achieve smaller margins of error, but conventional forward-facing cameras struggle with dynamically changing environments, and the algorithms that compensate for these dynamics are computationally expensive. Our downward-facing monocular camera addresses this issue by observing the terrain beneath the vehicle, which is far less dynamic than the scene ahead, while reducing both monetary and computational costs.

Who experiences the problem?

Many environments require autonomous vehicles to operate beyond the reach of GPS satellites. These include planetary exploration rovers, such as those operated by NASA. Vehicles that operate within a single building, such as cleaning robots or restaurant service robots, also work in GPS-restricted environments.

Why is it important?

The real world is dynamic and constantly changing. As such, any autonomous vehicle must be able to respond rapidly to changes in its environment. This timing requirement means that data must be delivered to the vehicle quickly and reliably. Our system aims to provide a low-cost solution to this problem.

What is the coolest thing about your project?

To obtain odometry data from images, the system first performs feature extraction on each image, then uses optical flow to track those features between frames. Finally, it performs motion estimation on the differences identified between two images. This motion estimate must output both the distance traveled and the trajectory (the direction of travel), and it must be computed fast enough to return useful data.
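The motion-estimation step can be illustrated with a minimal sketch. It assumes features have already been extracted and tracked by optical flow, so that `prev_pts[i]` and `curr_pts[i]` are the pixel positions of the same ground feature in two frames, and that the floor is flat so frame-to-frame motion is a planar rotation plus translation. The hypothetical `estimate_rigid_2d` fits the least-squares rotation and translation between the two point sets (a 2D Procrustes fit), and the hypothetical `accumulate` chains per-frame steps into a position, heading, and total distance traveled. The names, the rigid-motion model, and the pose-chaining convention are illustrative assumptions, not the team's implementation.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Motion = Tuple[float, float, float]  # (rotation in radians, x shift, y shift)


def estimate_rigid_2d(prev_pts: List[Point], curr_pts: List[Point]) -> Motion:
    """Least-squares rotation and translation mapping prev_pts onto curr_pts (2D Procrustes)."""
    n = len(prev_pts)
    if n != len(curr_pts) or n < 2:
        raise ValueError("need at least two matched point pairs")
    # Centroids of the two point sets
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(q[0] for q in curr_pts) / n
    ccy = sum(q[1] for q in curr_pts) / n
    # Dot- and cross-product sums of the centred pairs determine the best-fit angle
    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = cx - ccx, cy - ccy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    # Translation that carries the rotated previous centroid onto the current centroid
    c, s = math.cos(theta), math.sin(theta)
    tx = ccx - (c * pcx - s * pcy)
    ty = ccy - (s * pcx + c * pcy)
    return theta, tx, ty


def accumulate(steps: List[Motion]) -> Tuple[float, float, float, float]:
    """Chain per-frame (dtheta, dx, dy) body-frame steps into a world pose and path length."""
    x = y = heading = 0.0
    distance = 0.0
    for dtheta, dx, dy in steps:
        # Rotate the body-frame step into the world frame, then update the heading
        x += math.cos(heading) * dx - math.sin(heading) * dy
        y += math.sin(heading) * dx + math.cos(heading) * dy
        heading += dtheta
        distance += math.hypot(dx, dy)
    return x, y, heading, distance
```

In use, `estimate_rigid_2d` would be called once per frame pair on the tracked features. Its result is expressed in pixels in the image frame, and the ground appears to move opposite to the vehicle, so the transform would still need to be inverted and scaled to metres before being passed to `accumulate`; that conversion is deliberately left out of this sketch.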

What specific technical problems did you encounter?

Performing motion estimation on the collected data with accurate results was no easy task. It required implementing a complex algorithm and calibrating its output to match real-world measurements, including identifying and correcting noisy data.
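The calibration and noise-correction problems can be sketched as follows. This assumes a pinhole camera mounted perpendicular to a flat floor at a known height `height_m`, with focal length `focal_px` in pixels and lens distortion already removed; under those assumptions a pixel displacement d_px corresponds to a ground displacement d = d_px × h / f. Noisy feature tracks are discarded with a simple median-based filter before the remaining flow vectors are averaged. The function names and the threshold `k = 3.0` are hypothetical choices for illustration only.

```python
import math
import statistics
from typing import List, Tuple

Vec = Tuple[float, float]


def reject_outliers(flows: List[Vec], k: float = 3.0) -> List[Vec]:
    """Keep flow vectors lying within k robust deviations of the median flow."""
    if not flows:
        raise ValueError("no flow vectors to filter")
    mx = statistics.median([f[0] for f in flows])
    my = statistics.median([f[1] for f in flows])
    # Distance of each vector from the component-wise median flow
    dists = [math.hypot(fx - mx, fy - my) for fx, fy in flows]
    mad = statistics.median(dists)  # median absolute deviation (robust spread)
    if mad == 0.0:
        # At least half the vectors coincide exactly with the median; keep only those
        return [f for f, d in zip(flows, dists) if d == 0.0]
    return [f for f, d in zip(flows, dists) if d <= k * mad]


def pixels_to_metres(flow_px: Vec, height_m: float, focal_px: float) -> Vec:
    """Pinhole scaling for a camera looking straight down at flat ground: metres = pixels * h / f."""
    scale = height_m / focal_px
    return flow_px[0] * scale, flow_px[1] * scale


def frame_displacement(flows: List[Vec], height_m: float, focal_px: float) -> Vec:
    """Average the inlier flow vectors of one frame pair and convert the result to metres."""
    inliers = reject_outliers(flows)
    mean_px = (sum(f[0] for f in inliers) / len(inliers),
               sum(f[1] for f in inliers) / len(inliers))
    return pixels_to_metres(mean_px, height_m, focal_px)
```

A median-based filter is shown because it is cheap and needs no iteration, in keeping with the goal of low computational cost; RANSAC over the rigid-motion model is a common, more general alternative for rejecting bad matches.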